AI History & Overview

The world of AI is accelerating faster each day. It can be overwhelming and confusing to keep up with all of the advancements. This content was assembled to provide you with a high-level understanding of the space.

Foundational Definition:

Artificial intelligence (AI), at its core, is intelligence demonstrated by machines, as opposed to the intelligence of humans and other animals.

Brief History of AI:

While the study of mechanical or “formal” reasoning began with philosophers and mathematicians in antiquity, modern technological AI found its beginning in the mid-20th century:

- 1940s – McCulloch and Pitts’ 1943 paper established a formal design for Turing-complete “artificial neurons”.

- 1950s – Two approaches to AI emerged (symbolic and connectionist). The field of AI research was born at a 1956 workshop at Dartmouth College; the attendees became the founders and leaders of AI research, producing programs that learned checkers strategies, solved word problems in algebra, proved logical theorems, and spoke English.

- 1960s – Research in the U.S. was heavily funded by the Department of Defense, and laboratories were established worldwide.

- 1970s – Due to slow progress and competing governmental priorities, funding was cut off and an AI winter ensued.

- 1980s – AI development picked up again and interest in neural networks revived, but a second winter arrived in the late eighties.

- 1990s – Narrow AI started to become prevalent as researchers successfully applied AI to specific tasks.

- 2010s – Significant AI research efforts were being led by technology companies like Google.

- 2020s – Massive amounts of scalable computing power paired with data began to demonstrate the power and capability of AI.

Today, AI covers many different use cases and comprises six sub-disciplines:

- Machine Learning (ML)

- Natural Language Processing (NLP)

- Speech Recognition

- Machine Vision

- Robotics

- Expert Systems

Image Source: Flow Labs

1. Machine Learning

Machine learning is a broad subset of artificial intelligence that enables computers to learn from data and experience without being explicitly programmed.

Image Source: LinkedIn

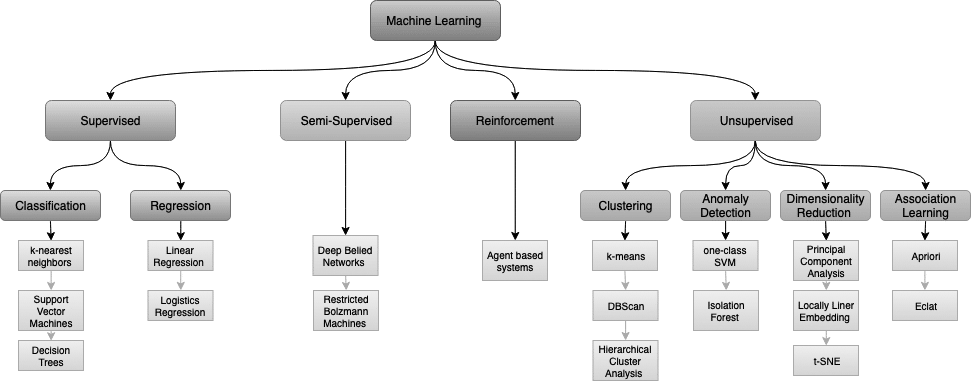

Machine learning algorithms can be divided into four categories: supervised, semi-supervised, reinforcement, and unsupervised.

Supervised Learning algorithms require a training dataset that includes both the input data and the desired output. Supervised learning can be further segmented into regression and classification algorithms. Classification algorithms assign each input to one of a set of discrete categories, while regression algorithms predict a continuous numeric value.
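To make the regression and classification split concrete, here is a minimal sketch in plain Python. The data (hours studied, temperatures) and the function names `fit_line` and `nearest_neighbor` are made up for illustration; real projects would normally reach for a machine learning library instead.

```python
# Toy supervised learning: every training example pairs an input with
# the desired output. All data below is hypothetical.

def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def nearest_neighbor(train_points, train_labels, query):
    """Classification: return the label of the closest training point."""
    distances = [abs(p - query) for p in train_points]
    return train_labels[distances.index(min(distances))]

# Regression: hours studied -> exam score (a continuous value).
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]
slope, intercept = fit_line(hours, scores)
predicted_score = slope * 5.0 + intercept

# Classification: temperature in Celsius -> a discrete label.
temps = [2.0, 5.0, 25.0, 30.0]
labels = ["cold", "cold", "warm", "warm"]
predicted_label = nearest_neighbor(temps, labels, 27.0)

print(predicted_score, predicted_label)
```

In both cases the algorithm only works because the desired output (a score or a label) is supplied alongside each training input.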

Semi-Supervised Learning applies when most of the training data is unlabeled and only a small portion is labeled. Most Semi-Supervised Learning algorithms therefore combine Supervised and Unsupervised techniques.

Reinforcement Learning trains a system to choose actions that achieve a particular goal. The system is exposed to a situation in which it can take an action and, depending on the action taken, it is rewarded or penalized. It then updates its policy, the mapping from situations to the actions it should and shouldn’t take. This cycle continues until the system finds the best action for each situation.
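The reward-and-update loop can be sketched with tabular Q-learning, one common reinforcement learning algorithm (chosen here for illustration; the text does not name a specific method). The corridor environment, reward values, and constants below are all assumptions.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

N_STATES = 5          # positions 0..4; reaching position 4 ends an episode
ACTIONS = [-1, +1]    # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration

# q[state][action_index] estimates the long-term reward of that action.
q = [[0.0, 0.0] for _ in range(N_STATES)]

def step(state, action_index):
    """Apply the action: reward +1 at the goal, a small penalty otherwise."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else -0.01
    return next_state, reward

for _ in range(200):  # episodes
    state = 0
    while state != N_STATES - 1:
        # Mostly act on current estimates, sometimes explore at random.
        if random.random() < EPSILON:
            action_index = random.randrange(2)
        else:
            action_index = 0 if q[state][0] > q[state][1] else 1
        next_state, reward = step(state, action_index)
        # Nudge the estimate toward reward plus discounted future value.
        target = reward + GAMMA * max(q[next_state])
        q[state][action_index] += ALPHA * (target - q[state][action_index])
        state = next_state

# The learned policy: the preferred action in each non-goal state.
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)
```

After training, the policy should prefer moving toward the goal from every position, because those actions accumulated the highest estimated reward.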

Unsupervised Learning does not require labeled training data and instead relies on the data itself to “learn” structure. In this sense it is the opposite of Supervised Learning: the training data does not include labels, so the system has to find patterns on its own. It mainly consists of techniques that group similar data points, such as clustering.
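Grouping unlabeled data points can be illustrated with k-means clustering, used here as one representative technique. This is a minimal one-dimensional sketch; the points, starting centers, and the function name `k_means_1d` are hypothetical.

```python
def k_means_1d(points, centers, iterations=10):
    """Group unlabeled 1-D points around the nearest of the given centers."""
    centers = list(centers)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its closest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster
        # (an empty cluster keeps its previous center).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters

# No labels are provided; the grouping emerges from the data alone.
points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centers, clusters = k_means_1d(points, centers=[0.0, 5.0])
print(centers, clusters)
```

Note that nothing tells the algorithm what the two groups mean; it only discovers that the points fall into two clumps.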

Machine learning itself contains several subfields, including neural networks and deep learning.

Image Source: IBM

Neural Networks

Neural networks are a subset of machine learning used to build software that learns and makes decisions in a way loosely inspired by humans. Artificial neural networks are composed of many interconnected processing nodes, or neurons, that can learn to recognize patterns, akin to the human brain. Deep neural networks stack many layers of these neurons to build a “deep”, or complex, model of data such as images and text.

One of the advantages of neural networks is that they can be trained to recognize patterns in data that are too complex for traditional computer algorithms. While traditional computer programs are deterministic, neural networks, like all other forms of machine learning, are probabilistic and can handle far greater complexity in decision-making.

The probabilistic nature of neural networks is what makes them so powerful. With enough computing power and labeled data, neural networks can solve a huge variety of tasks. One of the challenges of using neural networks is that they have limited interpretability, so they can be difficult to understand and debug. Neural networks are also sensitive to the data used to train them and can perform poorly if that data is not representative of the real world.

Deep Learning

Deep learning is a subset of machine learning. It has received a lot of attention in recent years because of the successes of deep learning networks in areas such as computer vision, speech recognition, and self-driving cars. Deep learning networks are composed of layers of interconnected processing nodes, or neurons. The first layer, or the input layer, receives input from the outside world, such as an image or a sentence. The next layer processes the input and passes it on to the next layer, and so on. These intermediate layers are often referred to as hidden layers. At the final stage, the output layer produces a prediction or classification, such as the identification of a particular object in an image or the translation of a sentence from one language to another.
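The flow from input layer to hidden layer to output layer can be sketched as a small forward pass. The weights below are hand-picked, hypothetical numbers (a trained network would learn them), and ReLU and softmax are common activation choices assumed for this example.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Activation that keeps positive values and zeroes out negatives."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights: 3 inputs -> 2 hidden neurons -> 2 output classes.
hidden_weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.0, -1.0], [-1.0, 1.0]]
output_biases = [0.0, 0.0]

def forward(inputs):
    hidden = relu(dense(inputs, hidden_weights, hidden_biases))   # hidden layer
    return softmax(dense(hidden, output_weights, output_biases))  # output layer

probabilities = forward([1.0, 2.0, 3.0])  # the list plays the role of the input layer
predicted_class = probabilities.index(max(probabilities))
print(probabilities, predicted_class)
```

Real networks differ mainly in scale: many more neurons per layer and many more hidden layers, with the same layer-by-layer pattern.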

These networks are called “deep” because they comprise three or more neural network layers. The depth of a network is important because it allows the network to learn complex patterns in the data. Deep learning networks learn to perform complex tasks by adjusting the strength of the connections between the neurons in each layer. This process is called “training.” The strength of the connections is determined by the data that is used to train the network. In general, the more representative data that is used, the better the network will be at performing the task it is trained to do.
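Training as "adjusting the strength of the connections" can be sketched with gradient descent on a single neuron. This is a deliberately tiny stand-in for a deep network; the data, learning rate, and step count are assumptions chosen so the example converges.

```python
# Hypothetical training data generated by the rule y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0   # connection strengths start uninformed
learning_rate = 0.05

def mean_squared_error(w, b):
    """Average squared gap between predictions and desired outputs."""
    return sum((w * x + b - y) ** 2 for x, y in examples) / len(examples)

initial_loss = mean_squared_error(weight, bias)

for _ in range(2000):
    # Gradient of the mean squared error with respect to weight and bias.
    grad_w = sum(2 * (weight * x + bias - y) * x for x, y in examples) / len(examples)
    grad_b = sum(2 * (weight * x + bias - y) for x, y in examples) / len(examples)
    # Move each parameter a small step in the direction that lowers the error.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

final_loss = mean_squared_error(weight, bias)
print(weight, bias, initial_loss, final_loss)
```

The weight and bias end up close to 2 and 1, the rule that produced the data, which shows how the training data determines the connection strengths.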

One of the advantages of deep learning models is that they can be trained to recognize patterns in data that are too complex for humans to identify. This makes them well-suited for tasks such as image recognition and natural language processing. This is also what led to the modern explosion in AI applications, as deep learning as a field isn’t limited to specific tasks.

Image Source: IBM

2. Natural Language Processing (NLP)

NLP, or natural language processing, is a subset of artificial intelligence that deals with the understanding and manipulation of human language. It is a field of AI that has been around for a long time, but has become more popular in recent years due to advances in machine learning and deep learning. NLP is used in a variety of applications, such as text classification, sentiment analysis, and machine translation. It can also be used to create chatbots and personal assistants. Google Translate and personal assistants such as Siri and Alexa are examples of applications that use NLP. These applications can understand and respond to human language, which is a very difficult task, and they rely on NLP to process and interpret the text they receive.

3. Speech Recognition

Speech recognition is a technology that enables a machine to understand spoken language and translate it into a machine-readable format. It is also known as automatic speech recognition or computer speech recognition. It offers a way to talk to a computer so that, on the basis of a spoken command, the computer can perform a specific task.

Some speech recognition software has a limited vocabulary of words and phrases and requires unambiguous spoken language to understand and perform a specific task. Today, various software and devices include speech recognition technology, such as Cortana, Google Assistant, and Apple Siri. Speech recognition systems need to be trained to understand language. Earlier systems were designed only to convert speech to text, but there are now devices that can convert speech directly into commands. Speech recognition systems can be used in system control or navigation systems, industrial applications, and voice dialing systems. They can be speaker dependent or speaker independent.

4. Machine Vision

Machine vision is an application of computer vision that enables a machine to recognize objects.

Machine vision captures and analyzes visual information using one or more video cameras, analog-to-digital conversion, and digital signal processing. Machine vision systems are programmed to perform narrowly defined tasks such as counting objects or reading a serial number. Computer systems do not see the way human eyes do, but they are also not bound by human limitations such as the inability to see through walls. Combined with machine learning and suitable sensors, an AI agent may be able to detect what lies behind a wall.
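A narrowly defined task such as counting objects can be sketched as follows, assuming the camera image has already been digitized and thresholded into a grid of 0s and 1s. The image and the function name `count_objects` are hypothetical, and production systems would typically use a dedicated vision library.

```python
def count_objects(image):
    """Count groups of touching 1-pixels (4-connectivity) in a binary image."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] == 1 and not seen[r][c]:
                # Found a pixel of a new object: flood-fill the whole object.
                count += 1
                stack = [(r, c)]
                seen[r][c] = True
                while stack:
                    y, x = stack.pop()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        inside = 0 <= ny < rows and 0 <= nx < cols
                        if inside and image[ny][nx] == 1 and not seen[ny][nx]:
                            seen[ny][nx] = True
                            stack.append((ny, nx))
    return count

# Hypothetical thresholded image: 1 = object pixel, 0 = background.
image = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1],
    [0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0],
]
object_count = count_objects(image)
print(object_count)
```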

5. Robotics

Most AI systems are never deployed in a physical form. They exist only as lines of code, processing data and making decisions. But a small subset of AI systems is deployed in a physical form: robotics systems. Robotics systems are a type of AI system used to control physical objects in the world. They can be built with both supervised learning and unsupervised learning.

There are a few different types of robotics systems. The most common type is the industrial robotics system. Industrial robotics systems are used for the automation of manufacturing processes. They are typically used to perform tasks that are dangerous, dirty, or dull. By taking over such work, robotics systems are already saving human lives and extending careers.

Another type of robotics system is the service robotics system. Service robotics systems are used to automate tasks that are performed by humans. They are typically used to assist humans with tasks that are difficult or dangerous, from healthcare to defense.

A third type of robotics system is the military robotics system. Military robotics systems are used to automate or augment tasks that are performed by soldiers.

Military robotics systems draw significant criticism. Even so, some researchers argue that autonomous robotic military systems may be capable of actually reducing civilian casualties. Humanity, not robots, has a dismal ethical track record when it comes to choosing targets during wartime. That said, this is no statement of support for wide-scale military adoption of robotics systems. Many experts have raised concerns about the proliferation of these weapons and the implications for global peace and security.

6. Expert Systems

Expert systems are computer programs that rely on capturing the knowledge of human experts and programming that knowledge into a system. Expert systems emulate the decision-making ability of human experts. These systems are designed to solve complex problems by reasoning over bodies of knowledge rather than through conventional procedural code. One commonly cited example is the spelling suggestion offered while typing in the Google search box. An expert system works by extracting knowledge from its knowledge base, applying reasoning and inference rules according to the user's queries. Expert systems are designed to be high-performing, reliable, highly responsive, and understandable.
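The combination of a knowledge base and inference rules can be sketched with forward chaining, one classic inference strategy. The car-troubleshooting rules and fact names below are invented for illustration and do not come from any real expert system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known

# Hypothetical knowledge base: (conditions that must all hold, conclusion).
rules = [
    (("engine_cranks", "engine_does_not_start"), "suspect_fuel_or_spark"),
    (("suspect_fuel_or_spark", "fuel_gauge_empty"), "diagnosis_out_of_fuel"),
    (("engine_does_not_crank", "lights_dim"), "diagnosis_weak_battery"),
]

# Facts supplied by the user's query.
facts = {"engine_cranks", "engine_does_not_start", "fuel_gauge_empty"}
conclusions = forward_chain(facts, rules) - facts
print(sorted(conclusions))
```

Because every conclusion traces back to explicit rules, the reasoning can be inspected step by step, which is one reason expert systems are described as understandable.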

AI INFLUENCERS

AI MODELS

Popular Large Language Models

Alpaca (Stanford)

Bard (Google)

Gemini (Google)

GPT (OpenAI)

LLaMA (Meta)

Mixtral 8x7B (Mistral)

PaLM-E (Google)

Vicuna (fine-tuned LLaMA)

Popular Image Models

Stable Diffusion (StabilityAI)

Leaderboards

NOTABLE AI APPS

Chat

Image Generation / Editing

Audio / Voice Generation

Video Generation

©2024 The Horizon